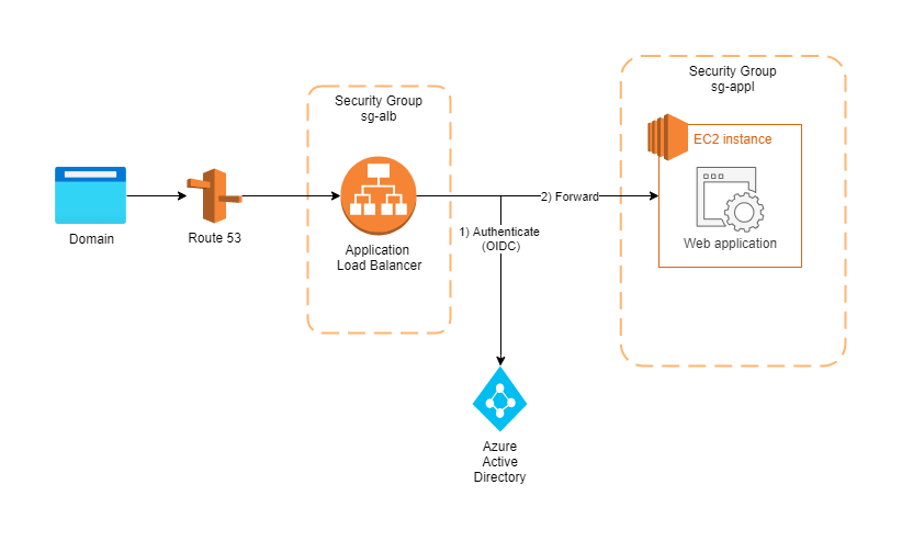

TL;DR: set up an Application Load Balancer (ALB), add an HTTPS listener (for which you need your own domain and an SSL/TLS certificate), and add a rule to the listener that first authenticates users via OIDC and then forwards them to your site. Voilà!

Imagine you have built a small web app with a web interface that you want to make available to all your colleagues. You would like to host it on Amazon Web Services (AWS), and of course you want to make sure that only your colleagues can access it! Let us also say that you want your users to log in via single sign-on (SSO) with their regular Microsoft accounts, which are part of your company’s Azure Active Directory (Azure AD).

If you have ever researched authentication on AWS, you will have noticed the seemingly endless number of possible approaches, and of tools to help you. You may have come across AWS Cognito, AWS SSO, AWS Amplify, AWS API Gateway, AWS Directory Service, …, and you may have seen a myriad of complicated authentication flow diagrams involving cookies, tokens et cetera. You will surely also have come across many third-party vendors offering their own products and services to secure your website.

But it does not have to be that complicated. The rest of this article shows a convenient way to set up SSO authentication with your Azure AD accounts via OpenID Connect (OIDC), without writing a single line of code. The main inspiration came from this AWS blog post; we add a few details for clarity.

Step 0: Deploy your web app in AWS

Before adding authentication, get your web app up and running. In this article we assume the application is hosted on an EC2 instance, but you could equally run it in containers on EKS or by other means.

Place the EC2 instance in a restrictive security group: allow SSH connections (ideally only from your own IP address), but do not allow any other inbound traffic yet.
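The console is all this step needs. If you would rather script it, the SSH rule can be expressed as the `IpPermissions` structure that boto3’s EC2 `authorize_security_group_ingress` call accepts. The sketch below only builds that structure and makes no AWS calls; the helper name `ssh_only_ingress`, the group ID and the example address 203.0.113.7 (a documentation range) are hypothetical placeholders.

```python
import ipaddress


def ssh_only_ingress(my_ip: str) -> list:
    """Hypothetical helper: IpPermissions allowing SSH (TCP 22) from one IPv4 address only."""
    addr = ipaddress.IPv4Address(my_ip)  # raises ValueError for malformed input
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": 22,
            "ToPort": 22,
            # A /32 prefix restricts the rule to exactly this one address
            "IpRanges": [{"CidrIp": f"{addr}/32", "Description": "SSH from admin IP"}],
        }
    ]


# Usage (needs AWS credentials; the group ID is a placeholder):
#   import boto3
#   boto3.client("ec2").authorize_security_group_ingress(
#       GroupId="sg-0123456789abcdef0", IpPermissions=ssh_only_ingress("203.0.113.7"))
```

Because no other rule is added, the instance accepts no inbound traffic apart from SSH for now.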

Step 1: Set up the Load Balancer

In the AWS EC2 dashboard, the left-hand menu contains a “Load Balancing” section. Under “Load Balancers”, create a new load balancer and choose “Application Load Balancer” (ALB) as its type. Select the “internet-facing” scheme and add an HTTPS listener.

Note that the load balancer can only be created if your VPC has subnets in at least two Availability Zones. If it does not, go to your VPC and add a subnet in a different Availability Zone.
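To check this requirement before opening the wizard, you can count the distinct Availability Zones among your VPC’s subnets. The sketch below assumes the subnet dictionaries come from boto3’s `describe_subnets` response (each has an `AvailabilityZone` key); the helper names and the VPC ID are hypothetical.

```python
def distinct_azs(subnets: list) -> set:
    """Collect the Availability Zones covered by a list of subnet descriptions."""
    return {s["AvailabilityZone"] for s in subnets}


def can_host_alb(subnets: list) -> bool:
    """An ALB needs subnets in at least two different Availability Zones."""
    return len(distinct_azs(subnets)) >= 2


# Usage (needs AWS credentials; the VPC ID is a placeholder):
#   import boto3
#   resp = boto3.client("ec2").describe_subnets(
#       Filters=[{"Name": "vpc-id", "Values": ["vpc-0123456789abcdef0"]}])
#   print(can_host_alb(resp["Subnets"]))
```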

On the “Configure Security Settings” page, you are asked to provide an SSL/TLS certificate and offered a link to AWS Certificate Manager (ACM). Follow the link and request a certificate. Note that this requires a web domain whose DNS configuration you control. The public IP address or DNS name of an EC2 instance (….compute.amazonaws.com) does not work! You can also obtain certificates elsewhere, e.g. from letsencrypt.org, and import them into ACM.
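As a scripted alternative to the ACM console, the request boils down to a domain name and a validation method. This sketch only builds keyword arguments for boto3’s ACM `request_certificate` call, assuming DNS validation; the helper name and `app.example.com` are hypothetical. It also rejects AWS-owned host names, for the reason given above.

```python
def certificate_request(domain: str) -> dict:
    """Hypothetical helper: keyword arguments for ACM request_certificate with DNS validation."""
    host = domain.strip().lower().rstrip(".")
    if host.endswith(".amazonaws.com"):
        # You cannot prove ownership of AWS-owned names such as EC2 public DNS names
        raise ValueError("use a domain whose DNS configuration you control")
    return {"DomainName": host, "ValidationMethod": "DNS"}


# Usage (needs AWS credentials; request the certificate in the ALB's region):
#   import boto3
#   resp = boto3.client("acm").request_certificate(**certificate_request("app.example.com"))
#   certificate_arn = resp["CertificateArn"]
```

With DNS validation, ACM then asks you to add a CNAME record to your domain, which is why control over its DNS matters.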

Place the ALB in a new security group of its own – one that allows inbound access via HTTPS on port 443 from all addresses.
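The load balancer’s inbound rule, expressed in the same boto3 `IpPermissions` shape as the EC2 rule, might look like the sketch below. It assumes IPv4 only; for IPv6 clients you would add an `Ipv6Ranges` entry with `::/0`. The helper name is hypothetical.

```python
def alb_https_ingress() -> list:
    """Hypothetical helper: IpPermissions allowing HTTPS (TCP 443) from any IPv4 address."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": 443,
            "ToPort": 443,
            # 0.0.0.0/0 matches every IPv4 address: the ALB is the public entry point
            "IpRanges": [{"CidrIp": "0.0.0.0/0", "Description": "HTTPS from anywhere"}],
        }
    ]


# Usage (needs AWS credentials; the group ID is a placeholder):
#   import boto3
#   boto3.client("ec2").authorize_security_group_ingress(
#       GroupId="sg-0fedcba9876543210", IpPermissions=alb_https_ingress())
```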

Under “Configure Routing”, you will need to create a “Target Group” that includes your web application – i.e. your EC2 instance along with the port that the application is exposed on. Select HTTPS or HTTP, depending on how you are exposing your application.
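In API terms, this step creates a target group of type `instance` and registers the EC2 instance on the application’s port. The sketch builds keyword arguments for boto3’s `elbv2` `create_target_group` and `register_targets` calls; the helper names, the name `my-app`, port 8080 and all IDs are hypothetical.

```python
def target_group_params(name: str, vpc_id: str, port: int, protocol: str = "HTTP") -> dict:
    """Hypothetical helper: keyword arguments for elbv2 create_target_group (EC2 instance targets)."""
    if protocol not in ("HTTP", "HTTPS"):
        raise ValueError("an ALB target group uses HTTP or HTTPS")
    if not 1 <= port <= 65535:
        raise ValueError("port out of range")
    return {"Name": name, "Protocol": protocol, "Port": port, "VpcId": vpc_id, "TargetType": "instance"}


def register_params(target_group_arn: str, instance_id: str, port: int) -> dict:
    """Hypothetical helper: keyword arguments for elbv2 register_targets (one instance)."""
    return {"TargetGroupArn": target_group_arn, "Targets": [{"Id": instance_id, "Port": port}]}


# Usage (needs AWS credentials; IDs are placeholders):
#   import boto3
#   elbv2 = boto3.client("elbv2")
#   tg = elbv2.create_target_group(**target_group_params("my-app", "vpc-0123456789abcdef0", 8080))
#   arn = tg["TargetGroups"][0]["TargetGroupArn"]
#   elbv2.register_targets(**register_params(arn, "i-0123456789abcdef0", 8080))
```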

Step 2: Configure authentication and forwarding in the load balancer

Select your newly created load balancer in the EC2 load balancing menu and open the “Listeners” tab. Edit the HTTPS listener on port 443 (or add it now if you have not done so yet). As the default action, choose “Authenticate” and then “OIDC”. You will now see a lot of fields to fill in (Issuer, Authorization endpoint, …), and this is where you need to jump over to your OIDC provider: in our case, our company’s Microsoft Azure Active Directory.

In the Azure portal, open Azure Active Directory. In the left-hand menu, find the entry “App registrations” and start a new registration. This is where you define who will be able to access your app, for example only accounts from your organisation. Important: fill in the optional “Redirect URI” with https://<your_sites_domain>/oauth2/idpresponse (see also here).
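A typo in the redirect URI is a common cause of login errors, so it can help to derive it from the bare domain. A minimal sketch; the helper name and `app.example.com` are hypothetical, and it assumes the site is served on the default HTTPS port 443.

```python
def alb_redirect_uri(domain: str) -> str:
    """Hypothetical helper: the redirect URI to register in Azure AD for an ALB serving `domain`."""
    host = domain.strip().lower().rstrip(".")
    # Reject schemes, paths and ports: the ALB expects the bare host plus /oauth2/idpresponse
    if not host or "/" in host or ":" in host:
        raise ValueError("pass a bare host name such as app.example.com")
    return f"https://{host}/oauth2/idpresponse"


print(alb_redirect_uri("app.example.com"))  # https://app.example.com/oauth2/idpresponse
```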

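Once the app is registered, its page in Azure AD provides all the endpoints and IDs you need to complete the “Authenticate” action in your load balancer. The load balancer also needs a client secret, which you can create under “Certificates & secrets” in the same app registration.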
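For Azure AD, the OIDC endpoints follow a fixed pattern based on your tenant ID. The sketch below assumes the v2.0 endpoints and uses the field names of the ALB’s OIDC action; please confirm the values against the “Endpoints” pane of your app registration or your tenant’s discovery document at https://login.microsoftonline.com/<tenant_id>/v2.0/.well-known/openid-configuration. The helper name is hypothetical.

```python
def azure_ad_oidc_endpoints(tenant_id: str) -> dict:
    """Hypothetical helper: Azure AD v2.0 OIDC endpoints, keyed like the ALB's OIDC fields."""
    if not tenant_id:
        raise ValueError("tenant_id is required")
    base = f"https://login.microsoftonline.com/{tenant_id}"
    return {
        "Issuer": f"{base}/v2.0",
        "AuthorizationEndpoint": f"{base}/oauth2/v2.0/authorize",
        "TokenEndpoint": f"{base}/oauth2/v2.0/token",
        # The user info endpoint is served by Microsoft Graph, not by the tenant URL
        "UserInfoEndpoint": "https://graph.microsoft.com/oidc/userinfo",
    }
```

The client ID (“Application (client) ID”) and the client secret come from the app registration itself and are not derivable from the tenant ID.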

Once this is complete, add a second action underneath: “Forward” to the target group that contains your EC2 instance.
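Taken together, the listener’s default actions are an ordered pair: authenticate first, then forward. The sketch builds the `DefaultActions` list in the shape boto3’s `elbv2` `modify_listener` call accepts; the helper name, ARNs and credentials are hypothetical, and the endpoint dictionary is assumed to carry the four endpoint fields from your Azure AD app registration. Keep the client secret out of source control.

```python
def listener_default_actions(oidc_endpoints: dict, client_id: str, client_secret: str,
                             target_group_arn: str) -> list:
    """Hypothetical helper: authenticate via OIDC first (Order 1), then forward (Order 2)."""
    required = {"Issuer", "AuthorizationEndpoint", "TokenEndpoint", "UserInfoEndpoint"}
    missing = required - set(oidc_endpoints)
    if missing:
        raise ValueError(f"missing OIDC fields: {sorted(missing)}")
    oidc_config = {key: oidc_endpoints[key] for key in sorted(required)}
    oidc_config.update({
        "ClientId": client_id,
        "ClientSecret": client_secret,
        "Scope": "openid",
        # Unauthenticated visitors are redirected to the identity provider's login page
        "OnUnauthenticatedRequest": "authenticate",
    })
    return [
        {"Type": "authenticate-oidc", "Order": 1, "AuthenticateOidcConfig": oidc_config},
        {"Type": "forward", "Order": 2, "TargetGroupArn": target_group_arn},
    ]


# Usage (needs AWS credentials; ARNs are placeholders):
#   import boto3
#   boto3.client("elbv2").modify_listener(
#       ListenerArn="arn:aws:elasticloadbalancing:...:listener/app/...",
#       DefaultActions=listener_default_actions(endpoints, client_id, client_secret, tg_arn))
```

The `Order` values make the sequence explicit: the forward action only runs for requests that passed authentication.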

Step 3: Allow the load balancer to talk to your EC2 instance

You’re almost there! Now revisit the security group settings of your EC2 instance and add an inbound rule that allows traffic only from the new load balancer. The most convenient way is to select the load balancer’s security group as the source (assuming the load balancer is the only resource in it!). As the port, select the one your web application is exposed on.
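Using a security group as the source means the rule references a group ID instead of an IP range. In boto3’s `IpPermissions` shape that is a `UserIdGroupPairs` entry, as in this sketch; the helper name, group ID and port 8080 are hypothetical.

```python
def app_ingress_from_alb(alb_security_group_id: str, app_port: int) -> list:
    """Hypothetical helper: IpPermissions admitting the app port only from the ALB's security group."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": app_port,
            "ToPort": app_port,
            # Referencing a group instead of a CIDR admits only resources that belong to that group
            "UserIdGroupPairs": [{"GroupId": alb_security_group_id, "Description": "from ALB"}],
        }
    ]


# Usage (needs AWS credentials; group IDs are placeholders):
#   import boto3
#   boto3.client("ec2").authorize_security_group_ingress(
#       GroupId="sg-0123456789abcdef0",
#       IpPermissions=app_ingress_from_alb("sg-0fedcba9876543210", 8080))
```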

Step 4: Test

When you now enter your web domain in a browser, you should be redirected to a single sign-on page from Microsoft (or whichever OIDC provider you chose). Incorrect or invalid credentials should produce an error message, while correct credentials should take you to your application. Congratulations!
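The same check can be done without a browser: an unauthenticated request to the site should be answered by the load balancer with a redirect to the identity provider. This standard-library sketch assumes Azure AD’s login host `login.microsoftonline.com`; `http.client` does not follow redirects, so the first response is what gets inspected. The helper names are hypothetical, and `check_site` needs network access to your real domain.

```python
import http.client
from urllib.parse import urlsplit

IDP_HOST = "login.microsoftonline.com"  # assumption: Azure AD as the OIDC provider
REDIRECT_CODES = {301, 302, 303, 307, 308}


def is_idp_redirect(status: int, location) -> bool:
    """True if the response redirects the browser to the identity provider's host."""
    if status not in REDIRECT_CODES or not location:
        return False
    return urlsplit(location).hostname == IDP_HOST


def check_site(domain: str, timeout: float = 10.0) -> bool:
    """Request the site's root over HTTPS and check that it redirects to the login page."""
    conn = http.client.HTTPSConnection(domain, timeout=timeout)
    try:
        conn.request("GET", "/")
        resp = conn.getresponse()
        return is_idp_redirect(resp.status, resp.getheader("Location"))
    finally:
        conn.close()


# Usage (placeholder domain): print(check_site("app.example.com"))
```

A `False` result with status 200 would mean the page is reachable without logging in, which is worth investigating before sharing the link.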

Final remarks

You now have a deployed application protected by a powerful authentication mechanism, including multi-factor authentication or similar if that is set up in your identity provider.

I hope you have found this approach convenient! Using a load balancer in front of the application can of course bring other benefits, too. Should you ever scale your application to run on multiple machines, the load balancer can follow its real calling. But even with only one application instance behind it, it has the advantage of handling all authentication-related interactions with clients, leaving your application’s EC2 instance to deal only with application requests.

Just note that load balancers do cost money (check https://calculator.aws/#/) and cannot be stopped, only deleted. So if you intend to run your application or EC2 instance only on demand, consider defining the load balancer as an Infrastructure-as-Code stack in CloudFormation or Terraform so that you can recreate it quickly.

Feel free to reach out and let me know what you think!